Hello, everyone!

My name is Manan. I am a student at NMIMS university, pursuing a B.Tech in Data Science.

I am starting this blog to talk about the Data Science projects I do, the new things I learn, and much more about this field.

So far, I have done only a few basic projects (Titanic, Housing Prices, MNIST, etc.). Today, I will be talking about the one I finished recently. It was the first time that I did a project completely on my own.

The dataset I was working on is the PIMA Indians Diabetes Database. This dataset is originally from the National Institute of Diabetes and Digestive and Kidney Diseases. The objective of the dataset is to diagnostically predict whether or not a patient has diabetes, based on certain diagnostic measurements included in the dataset.

Let's get started!

Preparing the Data:

If you check the dataset for nulls, it looks like there are no missing values.

But note that the missing values were not left as null; they were filled with zeros.

So, let's fix this for each Feature.
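A minimal sketch of how the hidden zeros can be exposed, assuming the Kaggle column names. The tiny sample frame is made up for illustration, and the list of columns where 0 means "missing" is an assumption based on the features discussed in this post.

```python
import numpy as np
import pandas as pd

# Tiny made-up sample using the dataset's column names (values are illustrative).
df = pd.DataFrame({
    "Pregnancies": [6, 1, 8, 0],   # a zero here is a real value, so it is left alone
    "Glucose": [148, 0, 183, 89],
    "BloodPressure": [72, 66, 0, 66],
    "SkinThickness": [35, 29, 0, 23],
    "Insulin": [0, 0, 0, 94],
    "BMI": [33.6, 26.6, 23.3, 0.0],
})

# Assumption: in these columns a 0 is not a plausible measurement.
zero_as_missing = ["Glucose", "BloodPressure", "SkinThickness", "Insulin", "BMI"]

# isna() reports nothing yet, but counting zeros shows the hidden gaps.
print(df.isna().sum().sum())
print((df[zero_as_missing] == 0).sum())

# Turn the placeholder zeros into real NaNs so they can be imputed later.
df[zero_as_missing] = df[zero_as_missing].replace(0, np.nan)
print(df.isna().sum())
```

Once the zeros are NaN, `fillna` can be used feature by feature.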

Age:

There are no faulty values (that is, none filled with 0s). We will just create a new feature, Age Category.
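A sketch of building such a category with `pd.cut`. The bin edges, the labels and the column name `AgeCategory` are assumptions for illustration; the post does not state which ones were used.

```python
import pandas as pd

ages = pd.DataFrame({"Age": [21, 29, 35, 47, 63, 81]})

# Assumed bin edges and labels (hypothetical, not taken from the notebook).
age_bins = [20, 30, 40, 50, 60, 100]
age_labels = ["21-30", "31-40", "41-50", "51-60", "60+"]

# By default pd.cut uses right-closed intervals: (20, 30], (30, 40], ...
ages["AgeCategory"] = pd.cut(ages["Age"], bins=age_bins, labels=age_labels)
print(ages)
```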

Glucose:

There are very few faulty values. I filled them with the median of the respective Age Category.

Then I cut Glucose into 2 categories. The cut-off was chosen because 0 - 140 is considered the normal range.
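The two Glucose steps can be sketched as below. The sample values, the column names `AgeCategory` and `GlucoseCategory`, and the labels "normal" and "high" are assumptions for illustration; only the 140 cut-off comes from the text.

```python
import numpy as np
import pandas as pd

# Made-up sample; NaN marks a faulty (originally 0) Glucose reading.
df = pd.DataFrame({
    "AgeCategory": ["21-30", "21-30", "21-30", "31-40", "31-40", "31-40"],
    "Glucose": [90.0, np.nan, 110.0, 150.0, 170.0, np.nan],
})

# Median Glucose of each age category, aligned row by row with the frame.
group_median = df.groupby("AgeCategory")["Glucose"].transform("median")
df["Glucose"] = df["Glucose"].fillna(group_median)

# Two categories: (0, 140] is treated as normal, anything above as high.
df["GlucoseCategory"] = pd.cut(
    df["Glucose"], bins=[0, 140, np.inf], labels=["normal", "high"]
)
print(df)
```

Using `transform` keeps the result the same length as the frame, so it can be passed straight to `fillna`.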

Insulin:

It showed a high correlation with Glucose, so I replaced the faulty values with the median of the respective Glucose Category.

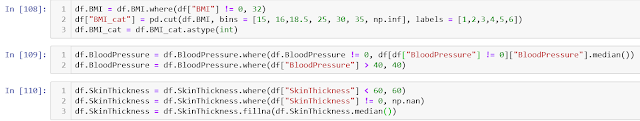
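A sketch of the correlation check and the fill for Insulin. The data is made up, and the column name `GlucoseCategory` and its labels are assumptions for illustration.

```python
import numpy as np
import pandas as pd

# Made-up sample; NaN marks a faulty (originally 0) Insulin reading.
df = pd.DataFrame({
    "Glucose": [85.0, 95.0, 120.0, 150.0, 180.0, 200.0],
    "Insulin": [60.0, np.nan, 100.0, 200.0, np.nan, 300.0],
})

# Pearson correlation; rows with NaN are skipped pairwise.
print(df["Insulin"].corr(df["Glucose"]))

df["GlucoseCategory"] = pd.cut(
    df["Glucose"], bins=[0, 140, np.inf], labels=["normal", "high"]
)

# Fill each gap with the median Insulin of its Glucose category.
category_median = (
    df.groupby("GlucoseCategory", observed=True)["Insulin"].transform("median")
)
df["Insulin"] = df["Insulin"].fillna(category_median)
print(df)
```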

BMI, BloodPressure & SkinThickness:

For each of these features, the mean, mode and median are almost the same (and the standard deviation is very small). So, I am replacing the faulty values with the median.
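A sketch of this check and fill on made-up values, assuming the faulty zeros have already been turned into NaN:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "BMI": [33.6, 26.6, np.nan, 28.1, 31.0],
    "BloodPressure": [72.0, 66.0, 64.0, np.nan, 74.0],
    "SkinThickness": [35.0, 29.0, np.nan, 23.0, 32.0],
})
cols = ["BMI", "BloodPressure", "SkinThickness"]

# Compare the summary statistics before choosing a fill value.
print(df[cols].agg(["mean", "median", "std"]))

# fillna with a Series fills each column with its own median.
df[cols] = df[cols].fillna(df[cols].median())
print(df)
```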

Pregnancies:

I have added a new feature by cutting Pregnancies into different categories.
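One possible way to do this cut is sketched below. The bin edges, labels and the column name `PregnancyCategory` are assumptions for illustration. Since 0 is a valid count here, the intervals are left-closed (`right=False`) so that 0 gets its own category.

```python
import numpy as np
import pandas as pd

preg = pd.DataFrame({"Pregnancies": [0, 1, 3, 4, 6, 10]})

# Assumed bins: [0, 1), [1, 4), [4, 7), [7, inf)
preg["PregnancyCategory"] = pd.cut(
    preg["Pregnancies"],
    bins=[0, 1, 4, 7, np.inf],
    right=False,
    labels=["0", "1-3", "4-6", "7+"],
)
print(preg)
```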

Final review:

Time for the fun part!

Training Models:

First, we will divide the data into 2 parts, with a test size of 0.25. I am using StratifiedShuffleSplit so that the proportion of each Outcome is the same in both the train and test sets.
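A sketch of such a split on synthetic data. The frame below is randomly generated and only borrows the dataset's column names; the `random_state` values are arbitrary.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import StratifiedShuffleSplit

# Synthetic stand-in for the prepared data (not the real dataset).
rng = np.random.default_rng(0)
df = pd.DataFrame({
    "Glucose": rng.normal(120, 30, size=200),
    "BMI": rng.normal(32, 6, size=200),
    "Outcome": np.repeat([0, 1], [130, 70]),  # 65% negative, 35% positive
})
X = df.drop(columns="Outcome")
y = df["Outcome"]

# One stratified split with 25% of the rows held out for testing.
splitter = StratifiedShuffleSplit(n_splits=1, test_size=0.25, random_state=42)
train_idx, test_idx = next(splitter.split(X, y))

X_train, X_test = X.iloc[train_idx], X.iloc[test_idx]
y_train, y_test = y.iloc[train_idx], y.iloc[test_idx]

# The share of positive outcomes should be nearly identical in both parts.
print(y_train.mean(), y_test.mean())
```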

I trained several models, and RandomForestClassifier and XGBoost gave the highest accuracy.
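A sketch of comparing several scikit-learn classifiers in one loop. It runs on synthetic data from `make_classification`, so the printed scores will not match the ones in this post. The hyperparameters and the GaussianNB base for the calibrated classifier are assumptions, and XGBoost is left out to keep the example dependent on scikit-learn only.

```python
from sklearn.calibration import CalibratedClassifierCV
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression, Perceptron
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in data with 8 features, like the diabetes dataset.
X, y = make_classification(n_samples=300, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

models = {
    "Logistic Regression": LogisticRegression(max_iter=1000),
    "K-Neighbors": KNeighborsClassifier(),
    "Gaussian Naive Bayes": GaussianNB(),
    # Assumption: the base estimator used for calibration is not stated in the post.
    "Calibrated (GaussianNB base)": CalibratedClassifierCV(GaussianNB(), cv=3),
    "Perceptron": Perceptron(random_state=42),
    "Random Forest": RandomForestClassifier(random_state=42),
}

# Fit each model and record its accuracy on the held-out test set.
scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)

for name, acc in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {acc:.3f}")
```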

RandomForest with hyperparameter tuning-
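A minimal sketch of tuning a random forest with `GridSearchCV`. The parameter grid and the synthetic data are assumptions for illustration; the grid actually used is in the notebook linked at the end of the post.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in data (not the real dataset).
X, y = make_classification(n_samples=300, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

# Hypothetical, deliberately small grid so the search runs quickly.
param_grid = {"n_estimators": [50, 100], "max_depth": [3, 5, None]}

search = GridSearchCV(
    RandomForestClassifier(random_state=42),
    param_grid,
    cv=3,
    scoring="accuracy",
)
search.fit(X_train, y_train)

# Evaluate the best combination on the untouched test set.
test_accuracy = accuracy_score(y_test, search.best_estimator_.predict(X_test))
print(search.best_params_, round(test_accuracy, 3))
```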

XGBoost-

The accuracy of all models:

RandomForestClassifier (after hyperparameter tuning): 0.765

Logistic Regression: 0.67

K Neighbours Classifier: 0.708

Gaussian Naive Bayes: 0.713

Calibrated Classifier: 0.713

XGBoost: 0.765

Perceptron: 0.677

The authors say that the maximum accuracy that can be achieved is 0.76. (source: https://www.kaggle.com/uciml/pima-indians-diabetes-database/discussion/24180)

So, yay! We're good!

But...

If you want to try for a better score, delete the outliers; the accuracy then rises to 0.82.
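The post does not say how the outliers were identified, so the sketch below uses the common 1.5 x IQR rule as an assumption. The helper `iqr_mask` is hypothetical and the values are made up.

```python
import pandas as pd

df = pd.DataFrame({
    "Insulin": [80, 95, 100, 110, 120, 130, 846],
    "BMI": [25.0, 27.5, 30.1, 31.2, 33.0, 35.4, 36.0],
})

def iqr_mask(series, k=1.5):
    """Return True for values inside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, q3 = series.quantile([0.25, 0.75])
    iqr = q3 - q1
    return series.between(q1 - k * iqr, q3 + k * iqr)

# Keep only the rows that are inside the fences for every checked column.
keep = iqr_mask(df["Insulin"]) & iqr_mask(df["BMI"])
df_clean = df[keep]
print(len(df), "->", len(df_clean))
```

Here only the row with an Insulin value of 846 falls outside the fences and is dropped.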

Links:

pima dataset on Kaggle- https://www.kaggle.com/uciml/pima-indians-diabetes-database

entire notebook on my GitHub-

https://github.com/mananjhaveri/PythonNotebooks_DS/blob/master/pima_diabetes.ipynb
